Algorithms can't prevent bias

Last Updated: Wed Oct 29 2025

https://themarkup.org/on-borrowed-time/2025/10/14/is-the-patient-black-check-this-box-for-yes

“That always raised a flag for me,” he says. “So, we’re different from the rest of the world? I got Black kidneys, and everybody else got white kidneys. It never set well with me.”

Craig Merrit

The story I linked above is a prime example of why, every time I hear an AI Booster talk about the power of AI to reduce or eliminate bias in whatever field they’re talking about, I have a little chuckle.

Before we get into AI, let’s have a look at the story.

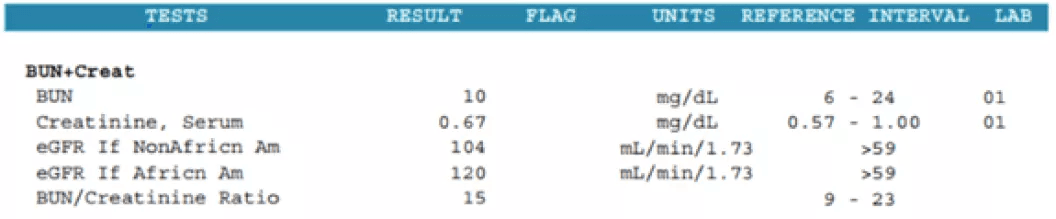

One of the ways a doctor measures your kidney health is something called the eGFR. It stands for “estimated Glomerular Filtration Rate”: it takes the level of creatinine (a waste product) in your blood and combines it with your age and sex to give a good picture of how well your kidneys are filtering. The higher the score, the better your kidneys are working.
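To make that concrete, here’s a minimal Python sketch of one published eGFR formula, the 2021 CKD-EPI creatinine equation, which is the race-free version US kidney bodies recommended in 2021. The function name and parameters are my own, and this is an illustration of how the inputs combine, not something to use clinically.

```python
def egfr_ckd_epi_2021(scr_mg_dl: float, age: float, female: bool) -> float:
    """Sketch of the race-free 2021 CKD-EPI creatinine equation.

    scr_mg_dl: serum creatinine in mg/dL. Returns eGFR in mL/min/1.73 m².
    """
    kappa = 0.7 if female else 0.9        # sex-specific creatinine reference point
    alpha = -0.241 if female else -0.302  # exponent used below the reference point
    ratio = scr_mg_dl / kappa
    egfr = (
        142
        * min(ratio, 1.0) ** alpha        # only matters when creatinine is low
        * max(ratio, 1.0) ** -1.200       # more creatinine -> lower score
        * 0.9938 ** age                   # score drifts down with age
    )
    if female:
        egfr *= 1.012                     # small sex adjustment
    return egfr


print(round(egfr_ckd_epi_2021(1.0, 50, female=False), 1))
```

The key point is the shape: creatinine goes in, gets scaled by age and sex, and a single number comes out that the rest of the system treats as “how healthy are these kidneys”.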

So far so good.

Except that back in the late ’90s, someone decided that because African Americans tend, on average, to have higher levels of creatinine in their blood, the score needed to account for that. So the formula got an extra adjustment just for African Americans: a multiplier that boosted their score higher.

Who can see the problem here?

Yup, that’s right: it meant that African Americans who were borderline, and who would have been flagged for intervention under the standard calculation, were being shunted down the priority list because their adjusted score said they were fine.
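Here’s a rough sketch of how that plays out. The article doesn’t say which equation each hospital used; the original late-’90s formula (MDRD) had a race multiplier, and so did its successor, the 2009 CKD-EPI equation, which is what I’m using below (×1.159 if the patient is recorded as Black). The patient is hypothetical, and the 20 mL/min/1.73 m² cut-off is an illustrative assumption, chosen because it’s roughly where US patients can start accruing kidney transplant waiting time.

```python
def egfr_ckd_epi_2009(scr_mg_dl: float, age: float, female: bool, black: bool) -> float:
    """Sketch of the 2009 CKD-EPI creatinine equation, race coefficient included."""
    kappa = 0.7 if female else 0.9
    alpha = -0.329 if female else -0.411
    ratio = scr_mg_dl / kappa
    egfr = 141 * min(ratio, 1.0) ** alpha * max(ratio, 1.0) ** -1.209 * 0.993 ** age
    if female:
        egfr *= 1.018
    if black:
        egfr *= 1.159  # the race coefficient: same blood test, higher score
    return egfr


# Hypothetical patient: 50-year-old man with serum creatinine of 3.5 mg/dL.
THRESHOLD = 20.0  # illustrative cut-off (assumption), in mL/min/1.73 m²

for black in (False, True):
    score = egfr_ckd_epi_2009(3.5, 50, female=False, black=black)
    print(f"black={black}: eGFR={score:.1f}, at or below threshold: {score <= THRESHOLD}")
```

Same kidneys, same blood test. The only thing that changes is one checkbox, and it moves this patient from just under the threshold to comfortably above it.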

It wasn’t until 2020/21 that the US medical establishment started to deal with the problem. In the meantime, many thousands of African Americans had suffered because the algorithm had prioritised others BY DESIGN.

AI, of course, would have done nothing to stop any of this; the bias was baked into the algorithm well before it got anywhere near any sort of automation.

This is the main issue, and it’s one that AIs just CANNOT fix. We’ve seen time and time again that AI systems absorb the biases inherent in their training data. If the training data contains a bias against African Americans, then decisions made by that AI system have the potential to show the same bias.

Remember when Grok went full white supremacist?

Bias is a human problem and needs human solutions. That means we need to know what is going into the systems that make decisions about us. And because “The purpose of a system is what it does”, we need to make sure our systems actually do the right thing.